
Multi-Investment Optimization

In the following, we show how PyPSA can deal with multi-investment optimization, also known as multi-horizon optimization.

Here, the total set of snapshots is divided into investment periods. For the model, this translates into multi-indexed snapshots: the first level is the investment period and the second level holds the corresponding time steps. In each investment period, new assets may be added to the system. Conversely, assets may only operate as long as their lifetime allows.
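A minimal pandas sketch of such a two-level snapshot index, using two periods of three daily time steps each (the period count and sizes are arbitrary, chosen only for illustration):

```python
import pandas as pd

# Two illustrative investment periods, each with three daily time steps
periods = [2020, 2030]
timesteps = pd.DatetimeIndex([])
for year in periods:
    timesteps = timesteps.append(pd.date_range(f"{year}-01-01", periods=3, freq="D"))

# First level: investment period; second level: time step within that period
snapshots = pd.MultiIndex.from_arrays(
    [timesteps.year, timesteps], names=["period", "timestep"]
)

# Select all time steps that belong to the 2030 investment period
in_2030 = snapshots.get_level_values("period") == 2030
print(snapshots[in_2030].get_level_values("timestep"))
print(snapshots.get_level_values("period").unique().tolist())
```

The network below is built the same way, only with 365 daily steps per period and four periods.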

In contrast to a single-period optimisation, the following concepts have to be taken into account:

- `investment_periods` (`pypsa.Network` attribute): the set of periods that specify when new assets may be built. In the current implementation, these have to be the same as the first-level values of the `snapshots` attribute.
- `investment_period_weightings` (`pypsa.Network` attribute): the weighting of each period in the objective function.
- `build_year` (general component attribute): an asset is only considered in an investment period if its build year is smaller than or equal to that period. For example, an asset with build year 2029 is considered in the investment period 2030, but not in the period 2025.
- `lifetime` (general component attribute): an asset is only considered in an investment period if it is still present at the beginning of that period. For example, an asset with build year 2029 and lifetime 30 is considered in the investment period 2055, but not in the period 2060.
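Taken together, `build_year` and `lifetime` decide whether an asset counts in a given period. The plain-Python sketch below encodes the rule exactly as stated in the list (built no later than the period, and not yet retired at the period's start). The function name `is_active` is hypothetical and this is not PyPSA's own code, although it is intended to agree with the `n.get_active_assets` call used later in this notebook.

```python
import math


def is_active(build_year: float, lifetime: float, period: int) -> bool:
    """Hypothetical helper: True if an asset counts in `period`.

    The asset must already be built (build_year <= period) and must still be
    within its lifetime at the start of the period (period < build_year + lifetime).
    """
    return build_year <= period < build_year + lifetime


# Examples from the text: build year 2029, lifetime 30 (retires after 2058)
print(is_active(2029, 30, 2025))  # not yet built
print(is_active(2029, 30, 2030))
print(is_active(2029, 30, 2055))
print(is_active(2029, 30, 2060))  # lifetime already over

# An asset with an infinite lifetime never retires
print(is_active(2020, math.inf, 2050))
```

The same rule explains the comments on the generators added below, for instance a build year of 2040 with lifetime 10 covers 2040 but not 2050.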

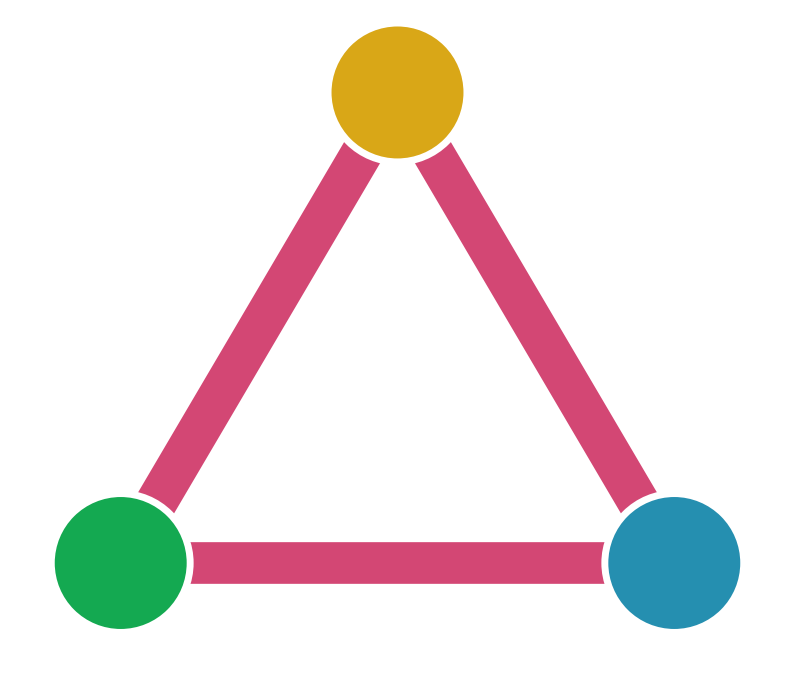

In the following, we set up a three-node network with generators, lines and storage units, and run an optimisation covering the time span from 2020 to 2050, with each decade forming one investment period.

[1]:

import pypsa
import pandas as pd
import numpy as np
import matplotlib.pyplot as plt


We set up the network with investment periods and snapshots.

[2]:

n = pypsa.Network()
years = [2020, 2030, 2040, 2050]
freq = "24"  # hours per snapshot

snapshots = pd.DatetimeIndex([])
for year in years:
    period = pd.date_range(
        start="{}-01-01 00:00".format(year),
        freq="{}h".format(freq),
        periods=8760 // int(freq),  # integer number of snapshots per year
    )
    snapshots = snapshots.append(period)

# convert to multiindex and assign to network
n.snapshots = pd.MultiIndex.from_arrays([snapshots.year, snapshots])
n.investment_periods = years

n.snapshot_weightings


[2]:

| period | timestep | objective | stores | generators |
|---|---|---|---|---|
| 2020 | 2020-01-01 | 1.0 | 1.0 | 1.0 |
|  | 2020-01-02 | 1.0 | 1.0 | 1.0 |
|  | 2020-01-03 | 1.0 | 1.0 | 1.0 |
|  | 2020-01-04 | 1.0 | 1.0 | 1.0 |
|  | 2020-01-05 | 1.0 | 1.0 | 1.0 |
| ... | ... | ... | ... | ... |
| 2050 | 2050-12-27 | 1.0 | 1.0 | 1.0 |
|  | 2050-12-28 | 1.0 | 1.0 | 1.0 |
|  | 2050-12-29 | 1.0 | 1.0 | 1.0 |
|  | 2050-12-30 | 1.0 | 1.0 | 1.0 |
|  | 2050-12-31 | 1.0 | 1.0 | 1.0 |

1460 rows × 3 columns

[3]:

n.investment_periods

[3]:

Index([2020, 2030, 2040, 2050], dtype='int64')

Set the number of years and the objective weighting per investment period. For the objective weighting, we consider a discount factor defined by

\[ D(t) = \frac{1}{(1+r)^t} \]

where \(r\) is the discount rate and \(t\) is the number of years elapsed since the start of the first investment period. For each period, we sum the discount factors of all years it covers, which gives the effective objective weighting.
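Because \(D(t)\) is geometric, the weighting of a period starting at year offset \(T\) and lasting \(n\) years also has the closed form \(\sum_{t=T}^{T+n-1} D(t) = (1+r)^{-T}\,\frac{1-(1+r)^{-n}}{1-(1+r)^{-1}}\). The standalone sketch below (plain Python, no PyPSA; both helper names are hypothetical) compares the year-by-year sum used in the next cell with that formula, and should reproduce the `objective` column that cell prints.

```python
def period_weight_loop(T: int, nyears: int, r: float) -> float:
    """Sum of discount factors 1/(1+r)**t for t = T, ..., T + nyears - 1."""
    return sum(1 / (1 + r) ** t for t in range(T, T + nyears))


def period_weight_closed(T: int, nyears: int, r: float) -> float:
    """Same sum via the geometric-series formula (assumes r > 0)."""
    v = 1 / (1 + r)
    return v**T * (1 - v**nyears) / (1 - v)


r = 0.01
T = 0
for period, nyears in zip([2020, 2030, 2040, 2050], [10, 10, 10, 10]):
    loop = period_weight_loop(T, nyears, r)
    closed = period_weight_closed(T, nyears, r)
    print(period, round(loop, 6), round(closed, 6))
    T += nyears
```

The closed form avoids the inner loop, but for a handful of decades the explicit sum in the notebook is just as clear.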

[4]:

# years per period: gaps between the periods, plus 10 years for the last one
n.investment_period_weightings["years"] = list(np.diff(years)) + [10]

r = 0.01  # discount rate
T = 0  # years elapsed since the start of the first period
for period, nyears in n.investment_period_weightings.years.items():
    discounts = [(1 / (1 + r) ** t) for t in range(T, T + nyears)]
    n.investment_period_weightings.at[period, "objective"] = sum(discounts)
    T += nyears

n.investment_period_weightings

[4]:

| period | objective | years |
|---|---|---|
| 2020 | 9.566018 | 10 |
| 2030 | 8.659991 | 10 |
| 2040 | 7.839777 | 10 |
| 2050 | 7.097248 | 10 |

Add the components

[5]:

for i in range(3):
    n.add("Bus", "bus {}".format(i))

# add three lines in a ring
n.add(
    "Line",
    "line 0->1",
    bus0="bus 0",
    bus1="bus 1",
)
n.add(
    "Line",
    "line 1->2",
    bus0="bus 1",
    bus1="bus 2",
    capital_cost=10,
    build_year=2030,
)
n.add(
    "Line",
    "line 2->0",
    bus0="bus 2",
    bus1="bus 0",
)

n.lines["x"] = 0.0001
n.lines["s_nom_extendable"] = True

[6]:

n.lines

[6]:

| Line | bus0 | bus1 | type | x | r | g | b | s_nom | s_nom_extendable | s_nom_min | ... | v_ang_min | v_ang_max | sub_network | x_pu | r_pu | g_pu | b_pu | x_pu_eff | r_pu_eff | s_nom_opt |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| line 0->1 | bus 0 | bus 1 |  | 0.0001 | 0.0 | 0.0 | 0.0 | 0.0 | True | 0.0 | ... | -inf | inf |  | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |
| line 1->2 | bus 1 | bus 2 |  | 0.0001 | 0.0 | 0.0 | 0.0 | 0.0 | True | 0.0 | ... | -inf | inf |  | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |
| line 2->0 | bus 2 | bus 0 |  | 0.0001 | 0.0 | 0.0 | 0.0 | 0.0 | True | 0.0 | ... | -inf | inf |  | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |

3 rows × 29 columns

[7]:

# add some generators
# random per-unit availability profile for the solar generator
p_max_pu_solar = pd.Series(
    np.random.uniform(size=len(n.snapshots)),
    index=n.snapshots,
    name="generator ext 2020",
)

# renewable (can operate 2020, 2030)
n.add(
    "Generator",
    "generator ext 0 2020",
    bus="bus 0",
    p_nom=50,
    build_year=2020,
    lifetime=20,
    marginal_cost=2,
    capital_cost=1,
    p_max_pu=p_max_pu_solar,
    carrier="solar",
    p_nom_extendable=True,
)

# can operate 2040, 2050
n.add(
    "Generator",
    "generator ext 0 2040",
    bus="bus 0",
    p_nom=50,
    build_year=2040,
    lifetime=11,
    marginal_cost=25,
    capital_cost=10,
    carrier="OCGT",
    p_nom_extendable=True,
)

# can operate in 2040
n.add(
    "Generator",
    "generator fix 1 2040",
    bus="bus 1",
    p_nom=50,
    build_year=2040,
    lifetime=10,
    carrier="CCGT",
    marginal_cost=20,
    capital_cost=1,
)

n.generators

[7]:

| Generator | bus | control | type | p_nom | p_nom_extendable | p_nom_min | p_nom_max | p_min_pu | p_max_pu | p_set | ... | shut_down_cost | min_up_time | min_down_time | up_time_before | down_time_before | ramp_limit_up | ramp_limit_down | ramp_limit_start_up | ramp_limit_shut_down | p_nom_opt |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| generator ext 0 2020 | bus 0 | PQ |  | 50.0 | True | 0.0 | inf | 0.0 | 1.0 | 0.0 | ... | 0.0 | 0 | 0 | 1 | 0 | NaN | NaN | 1.0 | 1.0 | 0.0 |
| generator ext 0 2040 | bus 0 | PQ |  | 50.0 | True | 0.0 | inf | 0.0 | 1.0 | 0.0 | ... | 0.0 | 0 | 0 | 1 | 0 | NaN | NaN | 1.0 | 1.0 | 0.0 |
| generator fix 1 2040 | bus 1 | PQ |  | 50.0 | False | 0.0 | inf | 0.0 | 1.0 | 0.0 | ... | 0.0 | 0 | 0 | 1 | 0 | NaN | NaN | 1.0 | 1.0 | 0.0 |

3 rows × 31 columns

[8]:

n.add(
    "StorageUnit",
    "storageunit non-cyclic 2030",
    bus="bus 2",
    p_nom=0,
    capital_cost=2,
    build_year=2030,
    lifetime=21,
    cyclic_state_of_charge=False,
    p_nom_extendable=False,
)
n.add(
    "StorageUnit",
    "storageunit periodic 2020",
    bus="bus 2",
    p_nom=0,
    capital_cost=1,
    build_year=2020,
    lifetime=21,
    cyclic_state_of_charge=True,
    cyclic_state_of_charge_per_period=True,
    p_nom_extendable=True,
)

n.storage_units

[8]:

| StorageUnit | bus | control | type | p_nom | p_nom_extendable | p_nom_min | p_nom_max | p_min_pu | p_max_pu | p_set | ... | state_of_charge_initial_per_period | state_of_charge_set | cyclic_state_of_charge | cyclic_state_of_charge_per_period | max_hours | efficiency_store | efficiency_dispatch | standing_loss | inflow | p_nom_opt |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| storageunit non-cyclic 2030 | bus 2 | PQ |  | 0.0 | False | 0.0 | inf | -1.0 | 1.0 | 0.0 | ... | False | NaN | False | True | 1.0 | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 |
| storageunit periodic 2020 | bus 2 | PQ |  | 0.0 | True | 0.0 | inf | -1.0 | 1.0 | 0.0 | ... | False | NaN | True | True | 1.0 | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 |

2 rows × 29 columns

Add the loads

[9]:

load_var = pd.Series(
    100 * np.random.rand(len(n.snapshots)), index=n.snapshots, name="load"
)
n.add("Load", "load 2", bus="bus 2", p_set=load_var)

load_fix = pd.Series(75, index=n.snapshots, name="load")
n.add("Load", "load 1", bus="bus 1", p_set=load_fix)

Inspect the load time series, then run the optimization

[10]:

n.loads_t.p_set

[10]:

| period | timestep | load 2 | load 1 |
|---|---|---|---|
| 2020 | 2020-01-01 | 46.042638 | 75.0 |
|  | 2020-01-02 | 67.603871 | 75.0 |
|  | 2020-01-03 | 76.970092 | 75.0 |
|  | 2020-01-04 | 73.892767 | 75.0 |
|  | 2020-01-05 | 90.854083 | 75.0 |
| ... | ... | ... | ... |
| 2050 | 2050-12-27 | 29.253072 | 75.0 |
|  | 2050-12-28 | 4.395190 | 75.0 |
|  | 2050-12-29 | 29.962454 | 75.0 |
|  | 2050-12-30 | 27.887712 | 75.0 |
|  | 2050-12-31 | 82.403469 | 75.0 |

1460 rows × 2 columns

[11]:

n.optimize(multi_investment_periods=True)

WARNING:pypsa.components:The following lines have zero r, which could break the linear load flow:

Index(['line 0->1', 'line 1->2', 'line 2->0'], dtype='object', name='Line')


INFO:linopy.model: Solve linear problem using Glpk solver

INFO:linopy.io:Writing objective.

Writing constraints.: 100%|██████████| 27/27 [00:00<00:00, 89.54it/s]

Writing continuous variables.: 100%|██████████| 10/10 [00:00<00:00, 260.95it/s]

INFO:linopy.io: Writing time: 0.35s

GLPSOL--GLPK LP/MIP Solver 5.0

Parameter(s) specified in the command line:

--lp /tmp/linopy-problem-n7fq24_7.lp --output /tmp/linopy-solve-n77y76tk.sol

Reading problem data from '/tmp/linopy-problem-n7fq24_7.lp'...

35776 rows, 12417 columns, 67163 non-zeros

188727 lines were read

GLPK Simplex Optimizer 5.0

35776 rows, 12417 columns, 67163 non-zeros

Preprocessing...

18250 rows, 8401 columns, 42705 non-zeros

Scaling...

A: min|aij| = 1.846e-03 max|aij| = 1.000e+01 ratio = 5.417e+03

GM: min|aij| = 4.954e-01 max|aij| = 2.019e+00 ratio = 4.075e+00

EQ: min|aij| = 2.516e-01 max|aij| = 1.000e+00 ratio = 3.975e+00

Constructing initial basis...

Size of triangular part is 17882

0: obj = -5.236759264e+05 inf = 2.657e+07 (5475)

Perturbing LP to avoid stalling [3352]...

6905: obj = 1.906527712e+07 inf = 7.544e+03 (162) 66

7191: obj = 1.921512521e+07 inf = 4.099e-08 (0) 3

Removing LP perturbation [8257]...

* 8257: obj = 1.796166447e+07 inf = 1.164e-12 (0) 8

OPTIMAL LP SOLUTION FOUND

Time used: 6.1 secs

Memory used: 27.0 Mb (28344637 bytes)

Writing basic solution to '/tmp/linopy-solve-n77y76tk.sol'...

INFO:linopy.constants: Optimization successful:

Status: ok

Termination condition: optimal

Solution: 12417 primals, 35776 duals

Objective: 1.80e+07

Solver model: not available

Solver message: optimal


INFO:pypsa.optimization.optimize:The shadow-prices of the constraints Kirchhoff-Voltage-Law were not assigned to the network.

[11]:

('ok', 'optimal')

[12]:

c = "Generator"
# optimised capacity per generator, counted only in periods where it is active
df = pd.concat(
    {
        period: n.get_active_assets(c, period) * n.df(c).p_nom_opt
        for period in n.investment_periods
    },
    axis=1,
)
df.T.plot.bar(
    stacked=True,
    edgecolor="white",
    width=1,
    ylabel="Capacity",
    xlabel="Investment Period",
    rot=0,
    figsize=(10, 5),
)
plt.tight_layout()
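`n.get_active_assets(c, period)` yields a boolean mask over the components of class `c`, so multiplying it by `p_nom_opt` zeroes out capacity that is not yet built or already retired in that period. The pandas-only sketch below reproduces that masking under the build-year/lifetime rule described at the top of this notebook, using the build years and lifetimes of the three generators defined above. The `p_nom_opt` numbers are made up for illustration and are not results of this optimisation; `active_mask` is a hypothetical helper, not PyPSA's implementation.

```python
import pandas as pd

# Build years and lifetimes as defined for the generators above;
# p_nom_opt values are illustrative placeholders, not optimisation results
gens = pd.DataFrame(
    {
        "build_year": [2020, 2040, 2040],
        "lifetime": [20, 11, 10],
        "p_nom_opt": [100.0, 40.0, 50.0],
    },
    index=["generator ext 0 2020", "generator ext 0 2040", "generator fix 1 2040"],
)


def active_mask(df: pd.DataFrame, period: int) -> pd.Series:
    """Hypothetical helper: built by `period` and not yet retired at its start."""
    return (df["build_year"] <= period) & (df["build_year"] + df["lifetime"] > period)


periods = [2020, 2030, 2040, 2050]
# One column per investment period, as in the capacity plot above
capacity = pd.concat(
    {period: active_mask(gens, period) * gens["p_nom_opt"] for period in periods},
    axis=1,
)
print(capacity)
```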

[13]:

# total dispatch per generator and investment period
# (recent pandas versions reject sum(level=0); group by the period level instead)
df = n.generators_t.p.groupby(level=0).sum().T
df.T.plot.bar(
    stacked=True,
    edgecolor="white",
    width=1,
    ylabel="Generation",
    xlabel="Investment Period",
    rot=0,
    figsize=(10, 5),
)
plt.tight_layout()
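The `level` keyword of reductions such as `DataFrame.sum` was deprecated in pandas 1.3 and removed in pandas 2.0; the replacement is to group by the index level and then reduce. A self-contained check on a toy `(period, timestep)` index, with arbitrary numbers standing in for `n.generators_t.p`:

```python
import pandas as pd

# Toy dispatch time series on a (period, timestep) index; values are arbitrary
index = pd.MultiIndex.from_tuples(
    [(2020, "t1"), (2020, "t2"), (2030, "t1"), (2030, "t2")],
    names=["period", "timestep"],
)
p = pd.DataFrame(
    {"gen A": [1.0, 2.0, 3.0, 4.0], "gen B": [0.5, 0.5, 1.0, 1.0]},
    index=index,
)

# pandas >= 2.0 replacement for p.sum(level=0): one row per investment period
per_period = p.groupby(level=0).sum()
print(per_period)
```

Grouping by `level="period"` works equally well when the level is named, which makes the intent more explicit.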